Semantic layers in AI

Cindi Howson at ThoughtSpot proposes an antidote to AI hallucinations

Many organisations want Generative AI (GenAI) for its ability to transform industries and automate repetitive enterprise tasks. However, AI hallucinations often scare enterprises into slower adoption and a narrower scope.

While they may not be able to fully eradicate hallucinations, organisations can significantly minimise them with more data and more context built into their AI systems. One re-emerging solution for this lies in semantic layers, which can be vital to minimising hallucinations and ensuring greater accuracy of answers in structured data sources.

What is a hallucination and why does it happen?

A hallucination takes place when a large language model (LLM) gives nonsensical answers to queries, or ad-libs in its response.

LLMs are trained on vast amounts of data. Ask them a question and they will suggest the next word or phrase based on the training data set. Less context reduces the likelihood of accurate responses. For example, you could ask an LLM to finish the sentence: “See Rover…” If it knew that Rover was a dog, it would likely fill in the blank with “run,” which would be correct much of the time.
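The idea of suggesting the next word from training data can be sketched with a toy word-pair counter. This is only an illustration: real LLMs use neural networks over tokens rather than simple counts, and the corpus and function names below are invented for the example.

```python
from collections import Counter, defaultdict

# Tiny invented corpus, for illustration only.
CORPUS = [
    "see rover run",
    "see rover run fast",
    "see rover sit",
    "see the rover land on mars",
]


def build_bigram_counts(sentences):
    """Count which word follows each word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw counts seen after `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    if total == 0:
        return {}  # the word never appeared with a follower in the corpus
    return {w: n / total for w, n in followers.items()}


counts = build_bigram_counts(CORPUS)
probs = next_word_probabilities(counts, "rover")
best = max(probs, key=probs.get)
print(best, probs)
```

In this corpus “run” is the most frequent continuation after “rover”, but “sit” and “land” remain possible. The answer depends entirely on what the model has seen.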

But context changes everything, and surrounding words help inform the LLM. If the question was “What does Rover do at the park?”, the LLM might answer with “chase the ball.” But does the LLM know that Rover is a dog? If so, does it infer this from the specific question, or from the training data where “Rover” is most often mentioned as a dog?

This illustrates the ongoing debate: are LLMs genuinely reasoning, or are they simply improving at predicting word patterns in a way that appears to be reasoning?

To date, data and analytics has been entirely deterministic. There can only be one correct answer. With GenAI, we are entering a phase of probabilistic AI.

For example, Liverpool won 25 games in the 2024/25 Premier League season. There is only one correct answer. But predicting the goals in their next game is probabilistic. Based on season averages, the LLM might say two. However, the more data and context the user can feed the LLM with - such as Liverpool’s next opponent, player injury reports, or weather - the more accurately it can predict the score of the upcoming game.
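The difference between the two kinds of answer can be sketched in a few lines. The results and goal counts below are invented for an imaginary team and are not real match data. The function names are also assumptions made for this example.

```python
import random
from statistics import mean

# Invented results for an imaginary team; not real data.
RESULTS = ["W", "W", "D", "L", "W", "W", "D", "W"]
GOALS_PER_GAME = [2, 3, 1, 0, 2, 4, 1, 3]


def count_wins(results):
    """Deterministic: the same input always gives the same single answer."""
    return results.count("W")


def simulate_next_game_goals(goals_history, rng):
    """Probabilistic: draw one plausible outcome from past games."""
    return rng.choice(goals_history)


wins = count_wins(RESULTS)            # always the same for this data
average_goals = mean(GOALS_PER_GAME)  # a best single guess for the next game

rng = random.Random(0)                # seeded so a run can be repeated
samples = [simulate_next_game_goals(GOALS_PER_GAME, rng) for _ in range(5)]
print(wins, average_goals, samples)
```

Counting wins is a lookup with one right answer. The next-game figure is an estimate drawn from a range of outcomes, and richer context (opponent, injuries, weather) would narrow that range.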

In 2023, someone asked, “how do I make cheese stick to pizza?” The LLM answered, “use non-toxic glue.” Unfortunately, this was not a hallucination. The answer came from a Reddit post that trained the LLM. To avoid this, sources can be weighted in LLM training. Alternatively, the user could give more context: “How do I make cheese stick to pizza using only food ingredients and cooking techniques?”

Each of these examples shows, in its own way, how an LLM can arrive at an inaccurate answer. The fix lies in more data and context, using as much information as possible to better inform LLMs and reduce hallucinations.

LLMs on corporate enterprise data

Initial use cases for GenAI centred around asking questions about data in the public domain. These LLMs were not trained on a company’s internal data. As a result, chatbots could answer questions around popular travel destinations, but couldn’t give information on a company’s top customers.

Further, LLMs can be trained on semi-structured data, such as text, PDFs, images and source code, but not on structured data stored in business applications and data lakes. However, CEOs are now asking why they can’t turn to ChatGPT for their company’s data.

Some organisations have tried to build their own chat agents, based on a highly curated data set and predefined questions. The user interface might be appealing, but the scope is too limiting to be broadly useful.

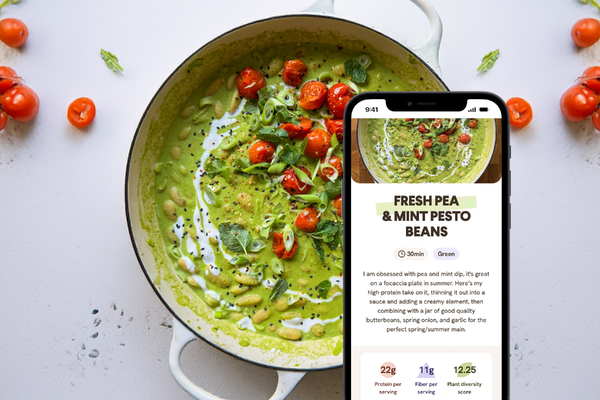

Instead, a well-defined semantic layer that sits between the LLM and the corporate data lake can make the system work. The semantic layer provides the LLM with the context to recognise that “top customers” could relate to sales revenue, quantity sold or customer tenure.
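As a rough sketch of why this matters, the same business term can give different answers depending on which definition is applied. The customer records, figures and names below are all invented, and the dictionary stands in for a semantic-layer entry rather than any real product's format.

```python
# Hypothetical customer records; names and figures are invented.
CUSTOMERS = [
    {"name": "Acme", "revenue": 120_000, "units": 300, "tenure_years": 2},
    {"name": "Globex", "revenue": 90_000, "units": 900, "tenure_years": 5},
    {"name": "Initech", "revenue": 40_000, "units": 150, "tenure_years": 12},
]

# Hypothetical semantic-layer entry: one business term ("top customers")
# with several candidate meanings, each tied to a concrete field.
TOP_CUSTOMER_METRICS = {
    "sales revenue": "revenue",
    "quantity sold": "units",
    "customer tenure": "tenure_years",
}


def top_customers(customers, meaning, limit=1):
    """Rank customers by the field behind the chosen meaning."""
    field = TOP_CUSTOMER_METRICS[meaning]
    ranked = sorted(customers, key=lambda c: c[field], reverse=True)
    return [c["name"] for c in ranked[:limit]]


for meaning in TOP_CUSTOMER_METRICS:
    print(meaning, "->", top_customers(CUSTOMERS, meaning))
```

Each meaning puts a different customer on top, so a system without these definitions would have to guess which one the user intended.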

What are semantic layers?

Semantic layers are not a new concept: they have existed since the early 1990s, the early days of business intelligence. In its simplest form, a semantic layer provides a business view of the data, hiding the complexities and often obscure naming of physical tables in a data store. Where the source system and data lake use “VBAP.L33”, the semantic layer would present “customer name.” Semantic layers are created both to promote reusability and to enable self-service analytics.
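In its simplest form, this kind of mapping can be sketched as a lookup from business names to physical columns. Only “VBAP.L33” and “customer name” come from the example above. The second column, the table name and the `to_sql` helper are assumptions made for illustration.

```python
# Business name -> physical column.
# "VBAP.L33" is the example used in the text; "VBAP.X01" is hypothetical.
SEMANTIC_MODEL = {
    "customer name": "VBAP.L33",
    "sales revenue": "VBAP.X01",  # hypothetical column
}


def to_sql(business_terms, table="VBAP"):
    """Translate business terms into a SELECT over the physical columns."""
    columns = []
    for term in business_terms:
        if term not in SEMANTIC_MODEL:
            # Refuse to guess: an undefined term is reported, not invented.
            raise KeyError(f"No definition for business term: {term!r}")
        physical = SEMANTIC_MODEL[term]
        alias = term.replace(" ", "_")
        columns.append(f"{physical} AS {alias}")
    return f"SELECT {', '.join(columns)} FROM {table}"


print(to_sql(["customer name", "sales revenue"]))
```

The user works only with the business names. Raising an error for an undefined term is a deliberate choice in this sketch, since silently guessing a column is exactly the behaviour a semantic layer is meant to prevent.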

Semantic layers vary: They can simply remap column names, or they can support complex dimensional models, multiple fact tables, optimal query execution, descriptions and data lineage. Importantly, they give the LLM context to ensure greater accuracy and fewer hallucinations.
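One way to picture how a semantic layer gives an LLM context is to render its definitions into the prompt alongside the user's question. The sketch below makes no call to any model. The `Field` class, the descriptions and the prompt wording are all assumptions for illustration, not a specific vendor's approach.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    business_name: str
    physical_column: str
    description: str


# "customer name" / "VBAP.L33" is the example from the text; the
# descriptions and the second field are invented.
FIELDS = [
    Field("customer name", "VBAP.L33", "Name of the customer on the order"),
    Field("sales revenue", "VBAP.X01", "Net order value in company currency"),
]


def build_prompt(question, fields):
    """Combine semantic-layer definitions and a question into one prompt."""
    lines = ["Use only the fields defined below to answer.", ""]
    for f in fields:
        lines.append(
            f"- {f.business_name} (column {f.physical_column}): {f.description}"
        )
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_prompt("Who are our top customers by sales revenue?", FIELDS)
print(prompt)
```

The resulting text would then be passed to whichever model the organisation uses. Richer layers could add lineage or join rules in the same way.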

One Semantic Layer to Rule Them All

Given the importance of semantic layers in ensuring trusted answers and reducing AI hallucinations, a single semantic layer for all analytics and AI applications would significantly streamline an organisation’s operations.

However, every application has its own semantic layer with varying levels of maturity and robustness. While efforts are underway to allow different semantic layers to interoperate, for now, enterprises should be pragmatic in evaluating semantic layers. They must recognise that this requires a combination of data governance and semantic tooling.

When evaluating semantic layers, start by ensuring a robust data governance programme is in place. This will help to unite business and technical teams with clear data ownership and shared definitions. From there, consider the fundamentals: how many data platforms each layer supports, how broadly it connects analytics, business intelligence and AI platforms, and whether synchronisation works in both directions.

Finally, consider the user interface for data modelling, and whether AI can enrich definitions based on other data sources such as employee training manuals and Confluence pages.

While semantic layers are an increasingly critical ingredient in battling hallucinations, organisations must also ensure workers are AI literate, so that they understand the risks of hallucinations and the differences between deterministic and probabilistic AI.

To effectively integrate GenAI, enterprises must combine robust semantic layers with clear data governance. This ensures LLMs receive the context they need to reduce hallucinations and deliver trustworthy responses. Organisations must foster AI literacy, training teams to understand both the possibilities and limitations of probabilistic AI. By prioritising these areas, companies can confidently scale their deployments while minimising risk and maximising business impact.

Cindi Howson is Chief Data & AI Strategy Officer at ThoughtSpot

Main image courtesy of iStockPhoto.com and agsandrew

© 2025, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543